Expert clinicians must stand at the forefront of the artificial intelligence healthcare revolution, according to a joint statement from the SoR, RCR and IPEM.

Released today (5 February), the joint statement calls for a properly trained and funded workforce with clear and consistent regulation across AI developers, healthcare providers, and professionals.

Together, the SoR (Society of Radiographers), RCR (Royal College of Radiologists) and IPEM (Institute of Physics and Engineering in Medicine) represent the scientific, clinical and technical professionals responsible for the safe deployment of AI across diagnostic imaging and radiotherapy services.

The statement responds to the Medicines and Healthcare products Regulatory Agency (MHRA) consultation on AI regulation in healthcare, which ran from 18 December 2025 to 2 February 2026.

Enhance, not replace

The statement emphasised: “Across our professions, there is clear consensus that AI must enhance, not replace, clinical expertise. Our members deploy and assure AI systems daily and see both their benefits and the risks when evidence, governance or workforce capacity are insufficient.”

Katie Thompson, president of SoR, said: “Radiographers are central to the safe use of AI in imaging and radiotherapy. Regulation must recognise frontline practice and invest in workforce capacity to ensure patient safety.”

With the technology already embedded across imaging and radiotherapy services – and with usage expanding rapidly – regulation must be grounded in clinical practice and reflect patient safety, workforce capacity, and NHS delivery realities.

Regulatory priorities

IPEM, RCR and SoR are aligned on three regulatory priorities:

- End-to-end assurance across the AI lifecycle: Regulation must require proportionate pre-market evidence, transparent communication of limitations and mandatory post-market surveillance to detect performance drift and bias, with clinicians retaining oversight throughout.

- Workforce capacity as a patient safety requirement: Safe AI deployment depends on a trained, resourced workforce. National workforce planning, funded training pathways, recognised roles and protected time must be integral to regulation.

- Clear system-wide accountability: Regulation should be clear on where responsibility lies between manufacturers, healthcare organisations and professionals, including expectations for transparency, training, post-market monitoring and liability.

The government's recently published National Cancer Plan reinforces this urgency. Its ambitions, such as 75 per cent of cancer patients surviving five years, depend on earlier diagnosis, timely treatment and high-quality imaging and radiotherapy services, supported by the safe, evidence-based and regulated deployment of AI.

Safety-critical technology

Dr Stephen Harden, president of the RCR, said: “Clinical radiologists and clinical oncologists see both the promise and risks of AI every day. Regulation must support professional judgement, be underpinned by robust evidence and provide clear accountability.”

Mark Knight, president of IPEM, said: “AI must be regulated as a safety-critical technology. That requires clear standards across the AI lifecycle and a workforce with the capability and authority to assure these systems in clinical practice.”

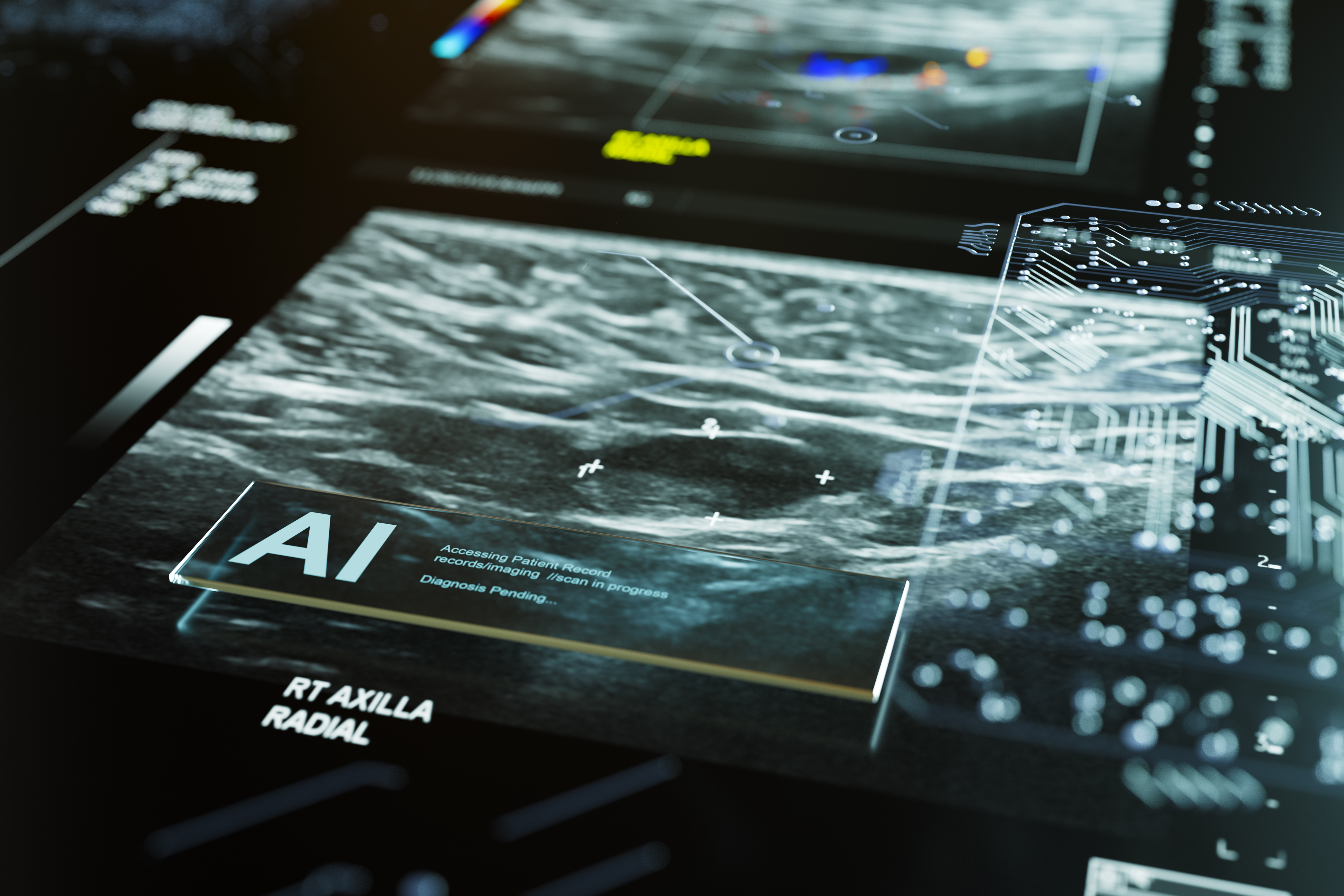

(Image: AI reporting on medical scan, by mphillips007, via Getty Images)